caffe-yolo summary

本博文记录博主对caffe的初步理解以及yolo在caffe上的运行

一、数据处理篇

1.1 Dataset转化为LMDB

如先前所做的总结,在这里再次强调一下,首先要将数据转化为LMDB或LEVELDB格式,再输入至caffe的数据输入层。而图片转化为LMDB格式时,其形状或维度含义为[heights, weights, channels] 。其代码(位于caffe/src/caffe/util/io.cpp)如下:

1 | void CVMatToDatum(const cv::Mat& cv_img, Datum* datum) {a CHECK(cv_img.depth() == CV_8U) << "Image data type must be unsigned byte"; |

而label文件对bounding-box的标记也从[Xmin, Ymin, Xmax, Ymax] 转化为[Xmid, Ymid, W, H],同时,对其进行了归一化操作;并将不同class转为对应的index(按照label_map进行`映射)。其代码(位于caffe/src/caffe/util/io.cpp)如下:

1 | void ParseXmlToDatum(const string& annoname, const map<string, int>& label_map, |

1.2 DataLayer

yolo网络训练、测试时所用的DataLayer是BoxDataLayer,该数据输入层是由caffe-yolo原作者编写。这里做一下简单的代码分析:

1 |

|

1.3 Input与预处理

在进行图像预处理时,可以使用去均值操作,其目的是使得像素值更接近(0,0,0)原点,从而加快收敛速度。如果在数据层加入去均值操作,预测时也需要进行去均值操作。如无,则无需!其方法如下:

1 | //(104,117,123)为imagenet均值,可自行根据数据集生成均值。 |

同时,图像预处理的目的之一是保证输入数据与网络输入层所要求的shape保持一致。通过opencv.imread(img_path)函数读取的图片为(heights, weights, channels)。而deploy.prototxt中的input层为(channels, heights, weights)。因此,在进行预测时需要对输入图片进行预处理。

1 | im = cv2.imread(im_path) |

而转化为graph文件后,其网络输入层为(heights, weights, channels),附件为转为graph文件后的网络结构图:

点击查看或下载

因此,无需对输入图片进行预处理:

1 | im = cv2.imread(input_image_path) |

二、caffe简介

2.1 Project结构

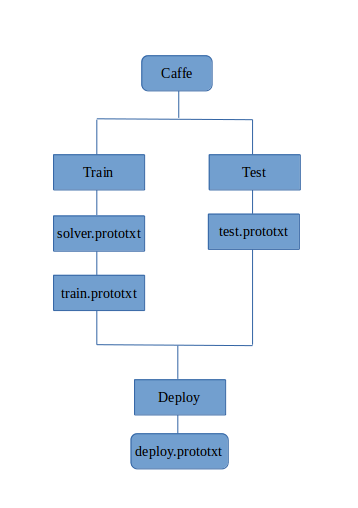

在caffe架构下搭建网络是通过prototxt文件描述的,以此建立统一的参数管理机制。在prototxt文件中,不仅包含基本的网络结构,还包含Loss层(Train时需要)、输入数据的路径和结构(Train与Test时需要)、输入数据size/ shape(如1601603,Deploy时需要)。因此,不同于keras,caffe的网络结构文件需要多个。

首先,solver.prototxt(即求解器)的主要功能是设置超参数,确定优化方式;其次, train.prototxt与test.prototxt的主要功能是搭建网络结构,设置结构参数用于训练与测试,确定loss层;最后,deploy.prototxt的主要功能是搭建最基础的网络结构用于预测。

2.2 网络结构

deploy.prototxt内容如下:

1 | name: "tiny-yolo" |

三、Tiny YOLO

Tiny-YOLO是YOLO算法的简单实现。相比于YOLO算法,它的网络结构更浅,仅有9层。除此外,其理论基础与YOLO并无二致。

3.1 Yolo Innovation

YOLO算法首创的实现了端到端的目标检测算法,是速度惊人、准确度较好的one-stage算法。YOLO算法将整张图片划分为SXS的grid,采用一次性预测所有格子所含目标的bounding-box、confidence以及P(object)和P(class|object)。

网络的输出结果为一个向量,size为:S * S * (B * 5 +C)。其中,S为划分网格数,B为每个网格负责目标个数,C为类别个数。其含义为:每个网格会对应B个边界框,边界框的宽高范围为全图,而中心点落于该网格;每个边界框对应一个置信度值,代表该处是否有物体及定位准确度(即Confidence = P(object) * IOU(predict-box, ground-truth)。);每个网格对应C个概率,分别代表每个class出现的概率。

而YOLO是如何实现对输入图像的分格呢?

原作者巧妙地在最后预测层设置了S * S * (B * 5 +C)个神经元(该层为全连接层,在yolo2中该层为1*1的卷积层),通过训练将对应不同网格的ground-truth收敛到对应的网格的输出中。

3.2 Loss

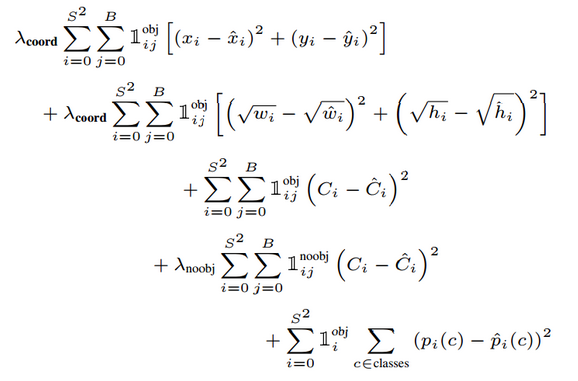

损失函数的设计目标就是让坐标(x,y,w,h),confidence,classification 这个三个方面达到很好的平衡。简单的全部采用了sum-squared error loss来做这件事会有以下不足:

首先,(num_side*4)维的localization error和(num_classes)维的classification error每一个维度产生的代价同等重要,这显然是不合理的。

其次,如果一些栅格中没有object(一幅图中这种栅格很多),那么就会将这些栅格中的bounding box的confidence置为0,相比于较少的有object的栅格,这些不包含物体的栅格对梯度更新的贡献会远大于包含物体的栅格对梯度更新的贡献,这会导致网络不稳定甚至发散。

因此,YOLO采取了更有效的Loss函数。将loss函数分为3部分:第一,坐标预测是否准确(图片中书写有误,xy值与groundtruth应相减不因相加);第二,有无object预测是否准确;第三,类别预测。

更重视8维的坐标预测,给这些损失前面赋予更大的loss weight, 记为 λcoord ,在pascal VOC训练中取5。对没有object的bbox的confidence loss,赋予小的loss weight,记为 λnoobj ,在pascal VOC训练中取0.5。有object的bbox的confidence loss 和类别的loss 的loss weight正常取1。

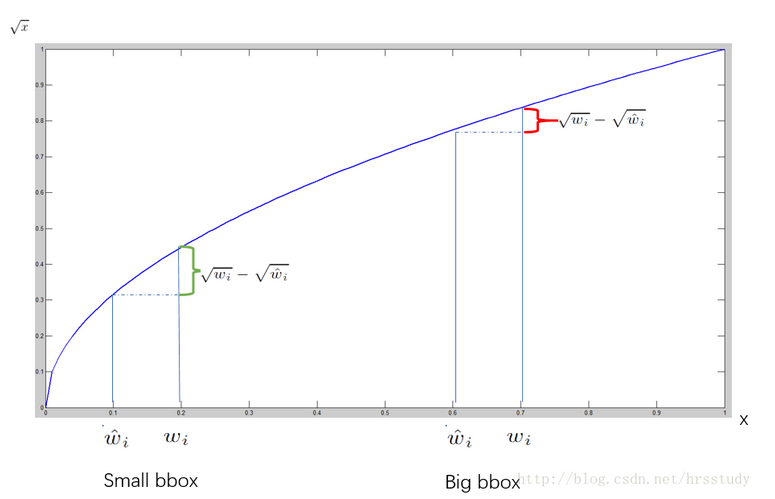

对不同大小的bbox预测中,相比于大bbox预测偏一点,小box预测偏相同的尺寸对IOU的影响更大。而sum-square error loss中对同样的偏移loss是一样。为了缓和这个问题,作者用了一个巧妙的办法,就是将box的width和height取平方根代替原本的height和width。 如下:small bbox的横轴值较小,发生偏移时,反应到y轴上的loss(下图绿色)比big box(下图红色)要大。

四、Train && Test

4.1 Optimization

本项目测试过SGD、momentum 、Adam。最终,Adam效果最佳。

4.2 solver.prototxt(Adam)

1 | net: "x_train.prototxt" |

4.3 train.prototxt

五、Predict

1 | #!/usr/bin/env python |